Tensorlog

15 Oct 2019 Logic Learning Query answering Neural-SymbolicTensorLog (Cohen et al., 2017) is an implementation of probabilistic knowledge bases that (i) leverages deep learning to answer queries efficiently, by (ii) restricting the first order logic language to a class called p-tree-DKG, and (iii) learns weights on knowledge base facts.

How

The paper explains that the problem with past approaches is that they rely on grounding first order clauses (either for training or answering queries). For example, given a (Prolog-like) rule:

\[p(X,Y) \text{ :- } q(Y,Z),r(Z,Y)\]Representing the formula:

\[\forall X \forall Y \ (p(X,Y) \lor \neg (q(Y,Z) \land r(Z,Y)))\]And a logic vocabulary with a set of constants \(\mathcal{C}\) of size \(n\), the amount of clauses generated by the grounding of this clause would be \(n^3\), as each variable can be replaced by any constant. It becomes apparent that with larger amounts of variables in the clause, grounding them can quickly become untractable.

Let us quickly define the following: a database is a set of ground facts \(\mathcal{DB} = \{f_1, ..., f_N\}\), and a theory \(\mathcal{T}\) is as a set of Horn clauses (Prolog like), which can also be seen as knowledge base rules (as in the example above).

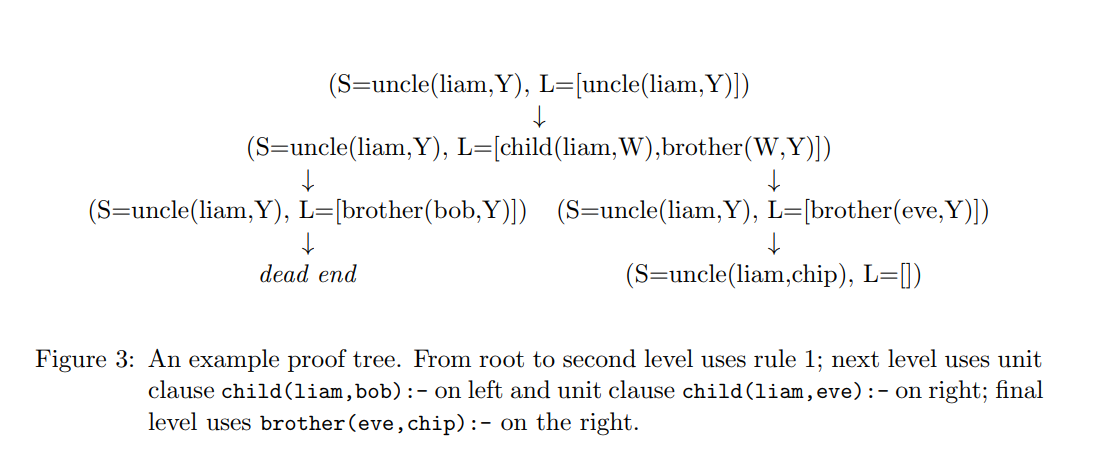

The proof methodology here is backwards chaining i.e. top-down unification of literals through rules. Then, a proof tree for a query \(Q\) is a graph where nodes contain tuples (S,L) consisting of a solution S (to a query or a successive unification state) and a list L of (non-grounded) literals to unify, where \((Q, [ Q ])\) is the root.

Now, in order to incorporate probabilistic reasoning to our scheme, we have to assign weights to our facts and rules and provide an extension for query answering through proof trees.

A stochastic logic program (SLP) is a tuple \((\mathcal{DB}, \mathcal{T}, \Theta)\) where \(\Theta\) maps literals and rules to non-negative real numbers. Given a query \(Q\) with proof graph \(G\) and an answer \(f\), the weight of \(f\) with respect to \(Q\) is:

\[w_Q(f) = \sum\limits_{v_0=(Q,[Q])\rightarrow ... \rightarrow v_n=(f,[])} \prod\limits_{i=0}^{n-1} \Theta_{r_{v_i,v_{i+1}}}\]Where \(r_{v_i,v_{i+1}}\) is the rule used between nodes \(v_i\) and \(v_{i+1}\) in \(G\).

Then, we can normalize all the answers \(\{ f_1,...,f_k \}\) to the query as:

\[P(f_i | Q) = \frac{w_Q(f_i)}{\sum\limits_{j=1}^{k} w_Q(f_j)}\](Theorem 1) Computing \(P(f|Q)\) for all possible answers \(f\) of the query \(Q\) is #P-hard, even if there are only two such answers, the theory contains only two non-recursive clauses, and the knowledge graph contains only 13 facts.

To bypass this, the authors propose a series of restrictions that lead to more efficient query answerings.

A polytree-limited stochastic deductive knowledge graph (ptree-SDKG) is a SLP where:

- \(\mathcal{DB}\) and \(\mathcal{T}\) have no function symbols.

- \(\mathcal{DB}\) and \(\mathcal{T}\) have only unary and binary predicates.

- Theory \(\mathcal{T}\) clauses have weight 1, i.e. \(\forall r \in \mathcal{T}, \ \Theta(r)=1\).

- Theory \(\mathcal{T}\) clauses are not recursive.

- Theory \(\mathcal{T}\) clauses \(r = A \text{ :- } B_1, ... , B_k\) satisfy that, given a graph with nodes \(B_i\) and edges \((B_i,B_j)\) where \(i \neq j\) for each pair of predicates that share a variable, then the graph has no cycles (polytree restriction).

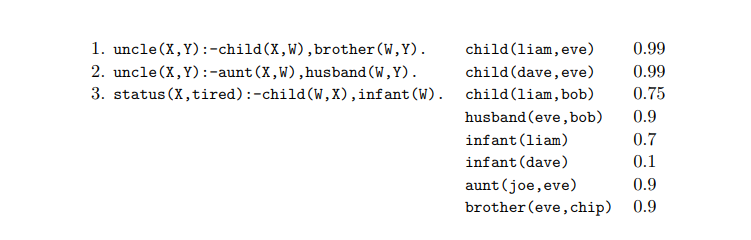

An example:

(Theorem 2) For any ptree-SDKG, \(P(f\mid Q)\) can be computed in linear time in the size of \(\mathcal{T}\) and \(\mathcal{DB}\).

With these restrictions, it holds that:

\[w'_Q(f) = \sum\limits_{v_0=(Q,[Q])\rightarrow ... \rightarrow v_n=(f,[])} \prod\limits_{ \{ f \in \mathcal{DB} \mid f \text{ is used in the path} \} } \Theta_{f}\] \[P(f_i | Q) = \frac{w'_Q(f_i)}{\sum\limits_{j=1}^{k} w'_Q(f_j)}\]Meaning that we can compute the normalized answer to a query \(Q\) only by looking at the facts that were used in the proof graph to unify, as they are the only ones with weight different than 1.

Conclusion

- Identified a family of probabilistic deductive databases called polytree-limited stochastic deductive knowledge graphs (ptree-SDKGs) which are tractable for query answering and learning weights of facts.

- Presented an algorithm for performing inference for ptree-SDKGs.

- Even if the logic language is maximally expressive, it seems highly restrictive, and all it can learn is weights for facts, not rules, then, query answering is efficient because it disallows recursion and imposes further restrictions.

- Implemented the framework, called Tensorlog, which is available in Github.

References

- Cohen, W. W., Yang, F., & Mazaitis, K. (2017). TensorLog: Deep Learning Meets Probabilistic DBs. CoRR, abs/1707.05390. http://arxiv.org/abs/1707.05390