Implicitly Learning to Reason in First-Order Logic

21 Oct 2019 Logic Learning Query answeringThis paper (Belle & Juba, 2019) considers the problem of answering queries about infinitary first order logic (infinite but countable amount of constants) formulas based on background knowledge partially represented explicitly as other formulas, and partially represented as examples independently drawn from a fixed probability distribution.

The authors present an algorithm that reduces reasoning with implicit learning to deciding entailment. Meaning it does not learn rules, but it can answer queries (i.e. resolve entailment) with implicit and explicit learning. They also provide theoretical guarantees if the implicit knowledge is drawn from a probability distribution.

Idea

Probably approximately correct (PAC) learning is a branch of machine learning that studies function inference under polynomial time complexity contraints with respect to the probability of getting an approximately correct answer. More precisely, complexity scales polynomially the more precise the approximation is and the more probable the answer is to lie within that approximation.

The paper starts off by defining a first order logic language with connectives \(\{\neg , \lor , \land , \forall , \exists , \Rightarrow , = \}\) and a countably infinite set of constants, such as \(\mathbb{N}\).

Their knowledge bases are defined as a finite, non-empty set of \(\forall\)-clauses. A \(\forall\)-clause is a formula of the form:

\[\forall x_1 ... \forall x_k \ ( e \Rightarrow a_1 \lor ... \lor a_n )\]Where:

- \(a_i\) are atoms.

- \(e\) is a formula built using: (i) equalities of the form \((x=c)\) with \(x\) variable and \(c\) constant; (ii) the logical connectives \(\{ \neg , \forall , \exists \}\).

- \(x_1 ... x_k\) are all the free variables of \(e \Rightarrow a_1 \lor ... \lor a_n\).

For example:

\[KB = \{ \forall x (Grad(x) \lor Prof(x)) , \ \forall x ((x \neq 1) \Rightarrow Grad(x)) \}\]As such, this knowledge bases are able to represent consistent sets of infinite ground clauses.

The rank of a knowledge base is the maximum amount of universal quantifiers present in any of its clauses.

A model of a KB is a consistent mapping from all possible grounded atoms to \(\{ true , false \}\) that satisfies the formulas of KB. A partial model of model \(M\) of KB is a mapping \(N\) from all possible grounded atoms to \(\{ true , false , * \}\) that is consistent with the model i.e. \(\forall p \ N[p] \neq * \Rightarrow N[p] = M[p]\).

Let \(N\) be a partial model of the KB, a ground formula is witnessed to be true iff it is true in \(N\). Next, a \(\forall\)-clause \(\forall \hat x \theta(\hat x)\) is witnessed true in a partial model \(N\) for the set of constants \(C\) if for every binding of \(\hat x\) to constants \(\hat c \in C\), the resulting ground clause \(\theta(\hat c)\) is witnessed true in \(N\).

Given a distribution \(D\) over all possible models of KB, a masking process \(\Theta\) is a function that maps a distribution over the models of KB, to a distribution over partial models of KB, i.e. \(\Theta (D)\) is a distribution over partial models of KB.

Then, given:

- An explicit KB (given as input, not inferred from examples).

- A set of partial models \(\{ N^{(1)} , ... , N^{(m)} \}\) (sets of possible true grounded atoms) that represent witnessed examples, i.e. sets of grounded atoms that hold together in an example. They would represent the implicit knowledge.

- A distribution \(D\) of \(\{ N^{(1)} , ... , N^{(m)} \}\) masked by a masking process \(\Theta\).

- A query (grounded formula) \(\alpha\).

- A number \(k\) of constants at least as large as the KB’s rank.

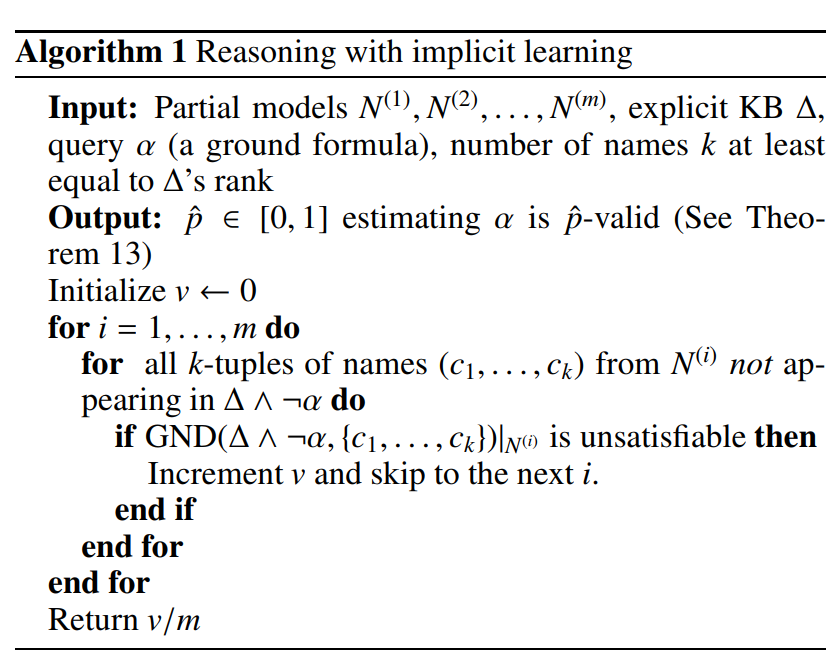

The authors present the following algorithm to estimate the probability \(\hat p\) of the query being right:

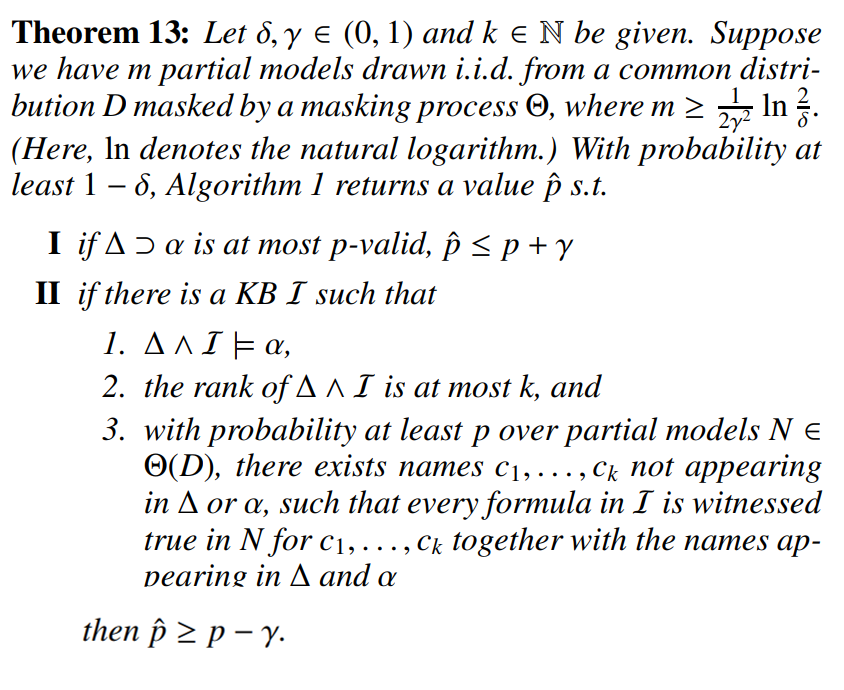

The guarantees of this procedure are given by the theorem:

Where given a distribution \(D\) over \(\{ N^{(1)} , ... , N^{(m)} \}\), a query \(\alpha\) is \(p\)-valid iff:

\(\sum\limits_{ \{ N^{(i)} \ \mid \ N^{(i)} \models \alpha \} }Pr(N^{(i)})) \geq p\).

References

- Belle, V., & Juba, B. (2019). Implicitly Learning to Reason in First-Order Logic. CoRR, abs/1906.10106. http://arxiv.org/abs/1906.10106